Many computer processes run on time scales far smaller than the ones most people encounter on a daily basis. Take a CPU cycle, for example, and say each one lasts 0.3 nanoseconds. Now try to compare that to a rotational disk with a rated latency of 1-10 milliseconds. The CPU cycle is obviously a lot faster, but to grasp just how much faster, it can help to start from a different baseline. That is exactly what a company called TidalScale did when presenting its research during a lecture several years ago.

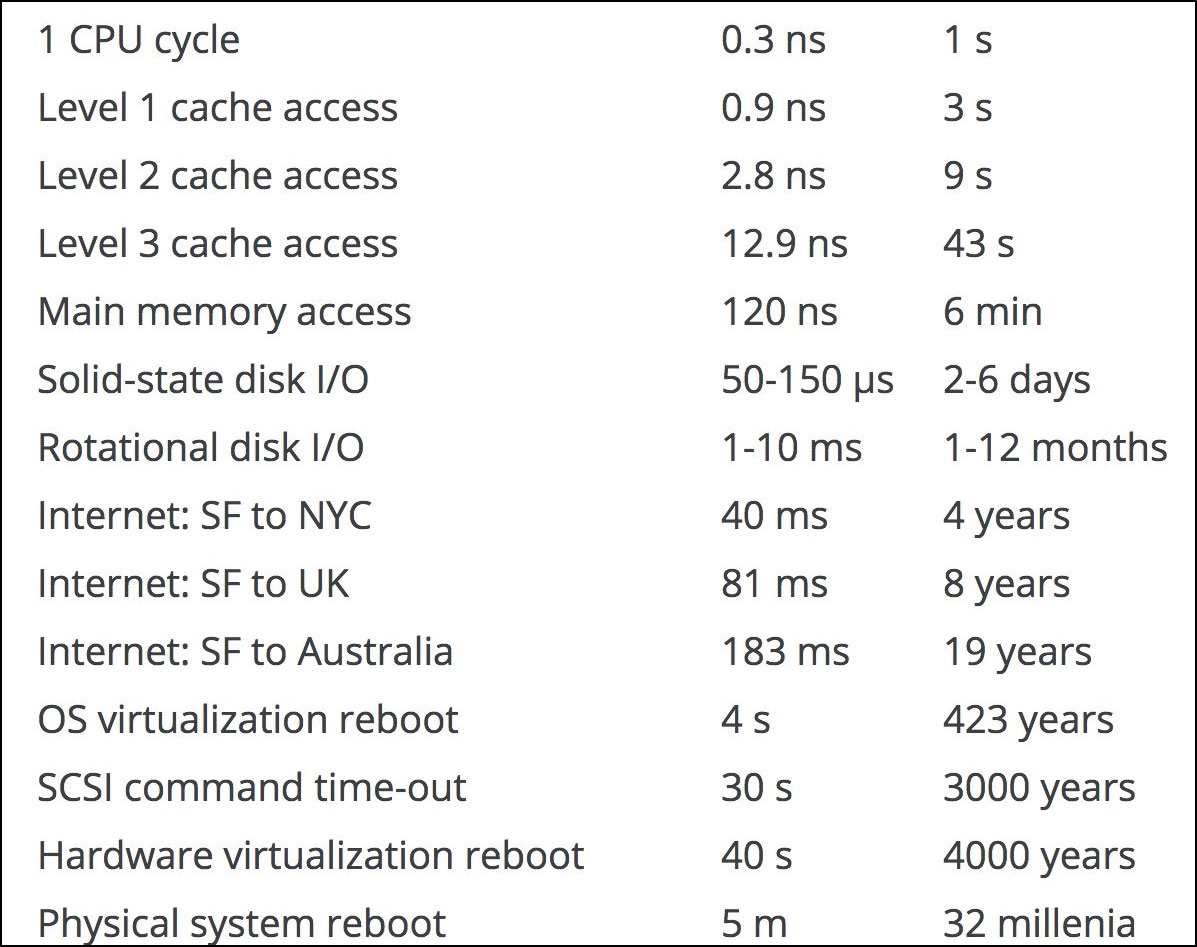

Unfortunately, the full lecture that TidalScale presented isn't available online, but the slides the company used are. A user on the ResetEra forums plucked one of the slides, a chart that translates average times for various computer operations into so-called "human" time, starting by converting a single 0.3 ns CPU cycle to 1 second.

“The ‘0.3 ns = 1 second’ isn’t a formula, that’s the conversion scale they’re using. It’s saying that, if 1 CPU cycle is 0.3 ns in length, here are relative values for other operations on the left hand side. And on the right hand side is the conversion, i.e. ‘let’s assume 1 CPU cycle was 1 second long, instead of 0.3 ns long, here’s how long those other operations would take in comparison,’” the user explains.

By getting rid of prefixes like “nano” and “milli” and starting from a more familiar baseline, it becomes a little easier to understand how fast or slow certain processes are relative to each other. For example, once a single CPU cycle is converted to 1 second, a rotational disk with a 1-10 ms latency converts to 1-12 months. That's not saying a disk access takes anywhere near a month (or a year), but it does illustrate the speed gap.
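The conversion is just a division by the 0.3 ns baseline. The short Python sketch below is an illustration, not TidalScale's own method; the function names and the 30.44-day average month are assumptions chosen for the example.

```python
# Minimal sketch of the chart's scaling, assuming (as the slide does) that
# one CPU cycle lasts 0.3 ns and is rescaled to exactly 1 second.
CPU_CYCLE_NS = 0.3  # baseline taken from the chart

SECONDS_PER_DAY = 86_400
SECONDS_PER_MONTH = 30.44 * SECONDS_PER_DAY  # assumption: average month length
NS_PER_MS = 1_000_000  # nanoseconds in one millisecond


def to_human_seconds(duration_ns: float) -> float:
    """Rescale a real duration (in ns) so one 0.3 ns CPU cycle becomes 1 s."""
    return duration_ns / CPU_CYCLE_NS


def to_human_months(duration_ns: float) -> float:
    """Same rescaling, expressed in (average-length) months."""
    return to_human_seconds(duration_ns) / SECONDS_PER_MONTH


if __name__ == "__main__":
    print(f"CPU cycle: {to_human_seconds(CPU_CYCLE_NS):.0f} s")
    for latency_ms in (1, 10):
        months = to_human_months(latency_ms * NS_PER_MS)
        print(f"Rotational disk, {latency_ms} ms: about {months:.1f} months")
```

Run as written, this gives roughly 1.3 and 12.7 months for the two disk latencies; the chart's own figures appear to be rounded, which is why it lists 1-12 months.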

Here is a look at some others:

Looking at the chart and accepting the original values as listed, a 4-second reboot of an OS in a virtualized environment works out to 423 years once a single 0.3 ns CPU cycle is scaled up to one second. Obviously it doesn't really take centuries to boot an OS, but the conversion does help show how much slower a reboot is than a CPU cycle.
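The 423-year figure follows from the same scale. Assuming a 365-day year (3.1536 × 10⁷ seconds), the arithmetic works out roughly as:

$$
\frac{4\ \text{s}}{0.3\ \text{ns}} \times 1\ \text{s}
= \frac{4}{0.3 \times 10^{-9}}\ \text{s}
\approx 1.333 \times 10^{10}\ \text{s}
\approx \frac{1.333 \times 10^{10}}{3.1536 \times 10^{7}}\ \text{years}
\approx 423\ \text{years}
$$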

Where this is perhaps most helpful is in comparing the various levels of cache with main memory. Scaled up to human time, the gaps between them show why keeping data in cache plays such an important role in performance.

Anyway, it’s something neat to keep in mind the next time you’re looking over hardware specs.