Nvidia just announced its newest Titan graphics card, the Titan V, at the NIPS 2017 conference. NIPS stands for Neural Information Processing Systems, and the focus is on AI and deep learning. Not surprisingly, the Titan V will do very well in those areas, and while it’s ostensibly a card that can be used for gaming, I don’t think many gamers are going to be willing to part with a cool $3,000 for the privilege.

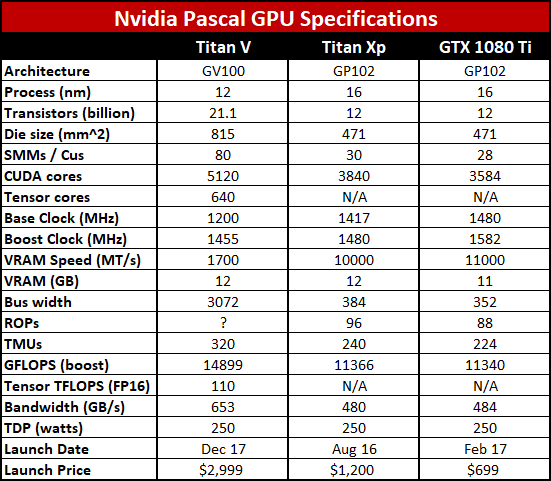

That’s more than twice the cost of the current champion, the Titan Xp—what could possibly warrant such an expense? Simple: lots and lots of compute power, thanks to the Volta GV100 architecture’s Tensor cores, and backed by 12GB of HBM2 memory. Here are the full specifications for the new behemoth, with the Titan Xp and GTX 1080 Ti provided for contrast.

Even ignoring the deep learning Tensor cores, this is a beast of a graphics card. With 5120 active CUDA cores, this is by far the highest performing graphics chip from Nvidia, and there are still four disabled SM clusters in this implementation of the GV100 architecture. Clockspeed is slightly lower than on the Titan Xp, and quite a bit lower than the 1080 Ti, but the added cores more than make up for that.

In raw numbers, the GPU portion of the Titan V can do 14.9 TFLOPS of single-precision FP32 computations, which is roughly 23 percent more than the Titan Xp's 12.1 TFLOPS and about 31 percent more than the GTX 1080 Ti's 11.3 TFLOPS. Note that the reported boost clocks are conservative estimates, though: the 1080 Ti, for instance, runs at closer to 1620MHz in most of my gaming benchmarks.
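As a rough sanity check on that figure, peak FP32 throughput is usually estimated as CUDA cores × 2 FLOPs per cycle (one fused multiply-add) × clock. The sketch below inverts that to find the boost clock implied by 5120 cores and 14.9 TFLOPS. The function names are mine, and the 2-FLOPs-per-core-per-cycle figure is the usual convention rather than something stated in the announcement.

```python
def peak_fp32_tflops(cuda_cores: int, clock_mhz: float) -> float:
    """Peak FP32 TFLOPS, assuming one FMA (2 FLOPs) per core per cycle."""
    return cuda_cores * 2 * clock_mhz * 1e6 / 1e12


def implied_clock_mhz(cuda_cores: int, tflops: float) -> float:
    """Clock (MHz) needed to reach a given peak FP32 TFLOPS figure."""
    return tflops * 1e12 / (cuda_cores * 2) / 1e6


# Figures quoted in the article: 5120 CUDA cores, 14.9 TFLOPS FP32.
titan_v_clock = implied_clock_mhz(5120, 14.9)
print(f"Implied Titan V boost clock: {titan_v_clock:.0f} MHz")  # 1455 MHz
```

Because real boost clocks tend to run above the rated value, actual throughput in a well-cooled system may be somewhat higher than this peak estimate.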

This is also the first pseudo-consumer graphics card from Nvidia to utilize HBM2 memory, but only three of the potential four HBM2 stacks on GV100 are active. With a memory clockspeed of 1.7Gbps and a 3072-bit memory interface, that’s good for 653GB/s of bandwidth.
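The bandwidth figure follows directly from the per-pin data rate and the bus width. Here is a minimal sketch of that arithmetic; the helper name is hypothetical, and the four-stack number at the end assumes the same 1.7Gbps data rate, which is my assumption and not a spec for any shipping product.

```python
def memory_bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Bandwidth in GB/s: per-pin data rate (Gbps) times bus width, 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8


# Titan V as described: 1.7Gbps across a 3072-bit interface (three HBM2 stacks).
titan_v_bw = memory_bandwidth_gbs(1.7, 3072)
per_stack_bw = titan_v_bw / 3

# Hypothetical: all four stacks active (4096-bit) at the same data rate.
four_stack_bw = memory_bandwidth_gbs(1.7, 4096)

print(f"Titan V:     {titan_v_bw:.1f} GB/s")
print(f"Per stack:   {per_stack_bw:.1f} GB/s")
print(f"Four stacks: {four_stack_bw:.1f} GB/s (assumed same clock)")
```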

But the real power of the Titan V, at least insofar as deep learning and AI calculations are concerned, comes from the 640 Tensor cores. There are eight special-purpose Tensor cores per SM cluster, and each Tensor core can perform a 4×4 matrix FMA (fused multiply-add) operation per cycle, multiplying two 4×4 matrices and adding the result to a third. That’s 64 FLOPS for the multiplies and another 64 FLOPS for the additions, so 128 FLOPS total per cycle. Multiply that by 640, and Nvidia’s reported total of 110 TFLOPS works out to a clock of around 1340MHz. That suggests the Tensor cores run at a slightly lower clockspeed than the rest of the GPU, though Nvidia might be including other factors here as well.
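The Tensor core math above can be laid out as a quick back-of-the-envelope calculation. This is only a sketch of the arithmetic in the paragraph, with constant and function names of my own choosing. The comparison clock is derived from the 14.9 TFLOPS FP32 figure and 5120 CUDA cores quoted earlier, assuming 2 FLOPs per core per cycle.

```python
TENSOR_CORES = 640
# A 4x4 by 4x4 matrix multiply needs 4 * 4 * 4 = 64 multiply-adds.
FMAS_PER_CORE_PER_CYCLE = 4 * 4 * 4
# Each multiply-add counts as two FLOPs (one multiply, one add).
FLOPS_PER_CORE_PER_CYCLE = 2 * FMAS_PER_CORE_PER_CYCLE
FLOPS_PER_CYCLE = TENSOR_CORES * FLOPS_PER_CORE_PER_CYCLE


def tensor_tflops(clock_mhz: float) -> float:
    """Peak Tensor TFLOPS at a given clock."""
    return FLOPS_PER_CYCLE * clock_mhz * 1e6 / 1e12


def implied_tensor_clock_mhz(tflops: float) -> float:
    """Clock (MHz) implied by a reported Tensor TFLOPS figure."""
    return tflops * 1e12 / FLOPS_PER_CYCLE / 1e6


tensor_clock = implied_tensor_clock_mhz(110)

# FP32 boost clock implied by 14.9 TFLOPS on 5120 CUDA cores (assumption: 2 FLOPs/core/cycle).
fp32_clock = 14.9e12 / (5120 * 2) / 1e6

print(f"FLOPs per cycle across all Tensor cores: {FLOPS_PER_CYCLE}")
print(f"Clock implied by 110 TFLOPS: {tensor_clock:.0f} MHz")
print(f"Tensor TFLOPS at the FP32-implied clock: {tensor_tflops(fp32_clock):.1f}")
```

If the Tensor cores ran at the same clock implied by the FP32 figure, the total would land near 119 TFLOPS, not 110, which is consistent with the lower-clock reading above.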

Utilizing the Tensor cores will require custom code, so it’s not something that you’ll immediately benefit from in games, and this is definitely not intended to be the ultimate gaming graphics card for next year. In fact, I suspect we’ll see something like a GV102 core at some point that completely omits the Tensor cores, perhaps even sticking with GDDR5X or GDDR6 memory instead of HBM2, but it could be some time before such a product materializes.

Architecturally, Nvidia has also stated in the past that Volta isn’t just Pascal with the addition of Tensor cores. The architecture has been changed in terms of thread scheduling and execution, memory controllers, the instruction set, the core layout, and more. But despite the massive die size and gobs of computational power, it’s impressive that the Titan V remains a 250W part. That bodes well for the true consumer Volta parts that we’ll eventually see sometime in 2018.

Or if you’ve got $3,000 burning a hole in your wallet, you can buy the Titan V on Nvidia’s site right now. Hell, why not buy several and slap them in a system for some serious computational power? Just don’t plan on SLI, as there are no SLI connectors. Not that you’ll need those when you’re building an AI to take over the world.